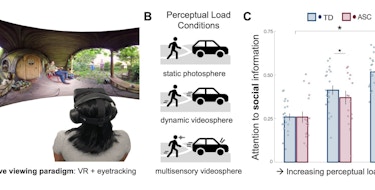

Social communication relies in part on recognizing the emotional state of the speaker. This information is conveyed by cues in the speaker's voice, collectively known as emotional prosody, including vocal pitch, speech rate, intonation, emphasis and rhythm, all of which vary depending on whether the speaker is, for example, happy or sad (Hammerschmidt and Jürgens, J. Voice, 2007). By listening to communication partners during early development, children learn to map these distinct vocal signals onto the corresponding emotional states (Blasi et al., Curr. Biol., 2011; Flom and Bahrick, Dev. Psychol., 2007). The ability to recognize emotional prosody has been shown to be important for effective social interaction and for forming relationships (Neves et al., R. Soc. Open Sci., 2021; Pell and Kotz, Emot. Rev., 2021). However, the brain systems that support recognition of emotional prosody remain largely unknown, both in typically developing children and in children with autism spectrum disorder (ASD), who face challenges in social communication. Most research in this area has been conducted in adults and has yielded inconsistent results. In a recent study supported in part by a SFARI Research Award, SFARI Investigator Vinod Menon and his colleagues used event-related functional magnetic resonance imaging (fMRI) to identify the brain systems that underlie emotional prosody processing in children and to relate them to social abilities (Leipold et al., Cereb. Cortex, 2022).

Menon and colleagues measured neural responses in typically developing children aged 7–12 years as they listened to emotional prosody and to emotionally neutral speech, focusing on specific subregions of the voice-sensitive auditory cortex. Using multivariate pattern analysis, a technique that is highly sensitive to differences in the spatial patterns of brain activity and that had not previously been applied in fMRI studies of emotional prosody in children, the researchers found that information about emotional prosody could be decoded from the middle and posterior divisions of the superior temporal sulcus (STS) in both hemispheres. Decoding accuracy in the middle STS was positively correlated with standardized measures of social communication skills: more accurate decoding, especially of sad prosody, predicted greater social communication abilities. These results have potential implications for understanding the neural basis of deficits in emotional prosody recognition in individuals with ASD.
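For readers unfamiliar with pattern-based decoding, the two analysis steps described above (decoding a condition from voxel activity patterns, then correlating each child's decoding accuracy with a behavioral score) can be sketched in plain Python. This is a minimal, hypothetical illustration on simulated data, not the authors' pipeline: it swaps in a simple correlation-based nearest-centroid classifier with leave-one-run-out cross-validation, a common MVPA setup, and the function names (`simulate_child`, `decode_accuracy`, `pearson`), voxel and trial counts, signal strengths and social communication scores are all invented for illustration.

```python
import random
import statistics


def pearson(a, b):
    """Pearson correlation between two equal-length sequences of numbers."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def centroid(patterns):
    """Voxel-wise mean of a list of activity patterns."""
    return [statistics.fmean(values) for values in zip(*patterns)]


def decode_accuracy(trials, labels, runs):
    """Leave-one-run-out accuracy of a correlation-based nearest-centroid classifier.

    For each held-out run, class centroids are computed from the remaining runs,
    and each held-out trial is assigned the label whose centroid it correlates
    with most strongly across voxels.
    """
    correct = 0
    for held_out in sorted(set(runs)):
        train = [(t, lab) for t, lab, r in zip(trials, labels, runs) if r != held_out]
        centroids = {
            label: centroid([t for t, lab in train if lab == label])
            for label in {lab for _, lab in train}
        }
        for t, lab, r in zip(trials, labels, runs):
            if r == held_out:
                guess = max(centroids, key=lambda c: pearson(t, centroids[c]))
                correct += int(guess == lab)
    return correct / len(trials)


def simulate_child(signal, n_voxels=50, n_runs=4, trials_per_run=10, seed=0):
    """Hypothetical voxel patterns for one simulated child.

    Both conditions share a baseline pattern; "emotional" trials add
    +signal * d and "neutral" trials add -signal * d, where d is a fixed random
    direction, and every voxel gets unit-variance Gaussian noise.
    signal=0 means the patterns carry no information about the condition.
    """
    rng = random.Random(seed)
    base = [rng.gauss(0, 1) for _ in range(n_voxels)]
    d = [rng.gauss(0, 1) for _ in range(n_voxels)]
    trials, labels, runs = [], [], []
    for run in range(n_runs):
        for i in range(trials_per_run):
            label = "emotional" if i % 2 == 0 else "neutral"
            sign = 1 if label == "emotional" else -1
            trials.append([b + sign * signal * dv + rng.gauss(0, 1)
                           for b, dv in zip(base, d)])
            labels.append(label)
            runs.append(run)
    return trials, labels, runs


if __name__ == "__main__":
    # Hypothetical cohort: signal strengths and scores are invented, not study data.
    signals = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
    scores = [85, 88, 95, 97, 104, 110]
    accuracies = [decode_accuracy(*simulate_child(s, seed=k))
                  for k, s in enumerate(signals)]
    print("decoding accuracies:", [round(a, 2) for a in accuracies])
    print("brain-behavior r:", round(pearson(accuracies, scores), 2))
```

Cross-validating by run keeps test trials out of the centroids they are scored against, so above-chance accuracy (chance is 0.5 for two balanced conditions) reflects information in the activity patterns rather than overfitting. The published analysis may differ in classifier, regions and statistics.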

Reference(s)

Leipold S., Abrams D.A., Karraker S., Menon V. Neural decoding of emotional prosody in voice-sensitive auditory cortex predicts social communication abilities in children. Cereb. Cortex (2022).